Evaluate Training Effectiveness: Key Metrics and Strategies

Measuring Training Effectiveness: Evaluating Impact and ROI for Business Outcomes

Training effectiveness shows whether learning interventions actually change knowledge, behaviour and measurable business results, and whether learning spend delivers value. Organisations measure effectiveness to confirm competence, reduce operational risk, raise performance and keep audit-ready records that meet standards such as ISO 9001 and ISO 27001. This guide sets out the leading evaluation models, practical methods, links to compliance, ROI approaches and the KPIs and governance you need to make measurement reliable and defensible for auditors and executives. You’ll get step‑by‑step advice on selecting models, designing surveys and assessments, collecting audit evidence, navigating attribution challenges and presenting ROI in stakeholder‑friendly formats. Comparison tables, checklists and quick‑reference lists help L&D teams and auditors compare methods, choose KPIs and prepare certification evidence.

What Are the Key Models for Measuring Training Effectiveness?

Evaluation models give structure to turning learning activity into evidence of impact — they make clear what to measure and how to interpret results. The most common frameworks are Kirkpatrick’s four levels and the Phillips ROI model, each serving a different purpose from immediate reaction to monetised business value. Pick Kirkpatrick when you need staged evidence of learning and behaviour change; choose Phillips when leaders require a monetary ROI. The sections below explain how each model works and offer practical steps to apply them in corporate and audit settings, including the type of evidence auditors will expect at each level.

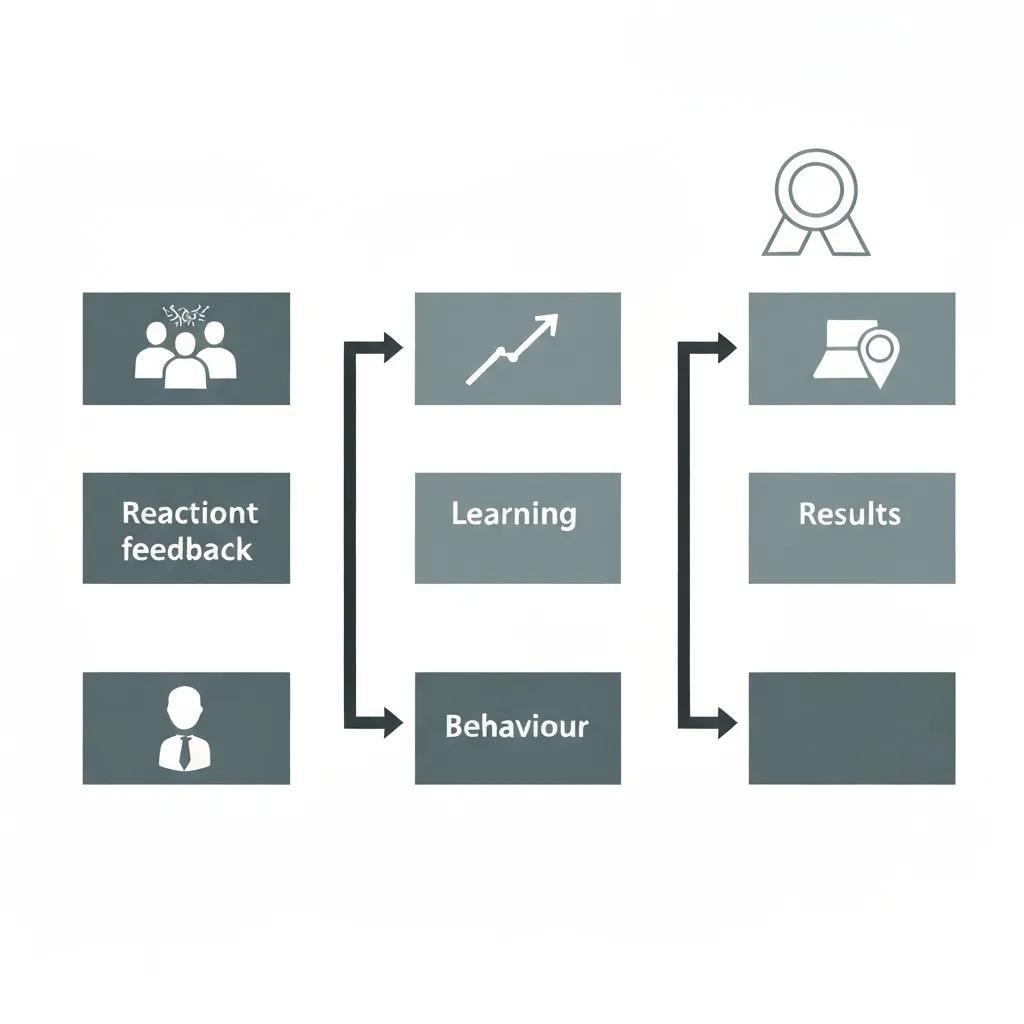

How Does the Kirkpatrick Model Assess Training Impact?

The Kirkpatrick Model evaluates training across four levels — Reaction, Learning, Behaviour and Results — creating a clear evidence chain from participant feedback to business outcomes. Level 1 (Reaction) captures immediate responses with surveys and sentiment scores that indicate engagement and perceived relevance. Level 2 (Learning) relies on objective pre‑ and post‑training assessments to demonstrate knowledge or skill gain — essential where competence clauses (for example ISO 9001) apply. Level 3 (Behaviour) uses structured observations, performance records and manager feedback to show transfer to the job. Level 4 (Results) connects learning to operational KPIs such as error rates, throughput or customer satisfaction. Tools like observation rubrics and assessment scorecards help translate Kirkpatrick levels into audit‑ready records and make the chain of evidence explicit for auditors and stakeholders.

What Is the Phillips ROI Model and How Does It Quantify Training Value?

The Phillips ROI Model extends Kirkpatrick with a fifth level — ROI — that converts training‑attributable business results into a monetary value and compares that with training cost. The approach isolates training impact, converts outcome changes into money and calculates ROI as (Net Benefits / Training Costs) × 100. Reliable implementation needs baseline KPIs, comparison or control groups where feasible, and validated conversion factors to monetise outcomes like productivity gains or avoided error costs. A worked example typically measures pre/post changes (for example error rate), converts the reduction to cost saved, subtracts training costs and divides by total cost to produce an ROI percentage. Good governance — documenting assumptions and methods — is essential so the calculation stands up to auditor and stakeholder scrutiny.
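The calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: every figure is invented, and the names `training_roi`, `cost_per_error` and `attribution` are assumptions introduced here. `attribution` stands for the share of the improvement credited to training once other factors have been isolated.

```python
def training_roi(baseline_errors, post_errors, cost_per_error,
                 training_cost, attribution=1.0):
    """Return (net_benefit, roi_percent) for an error-reduction programme.

    attribution is the fraction of the improvement credited to training.
    It is an assumption that should be documented and justified.
    """
    if training_cost <= 0:
        raise ValueError("training_cost must be positive")
    # Step 1: isolate the part of the change attributed to training.
    errors_avoided = (baseline_errors - post_errors) * attribution
    # Step 2: convert the outcome change into money.
    monetary_benefit = errors_avoided * cost_per_error
    # Step 3: ROI = (net benefits / training costs) x 100.
    net_benefit = monetary_benefit - training_cost
    roi_percent = net_benefit / training_cost * 100
    return net_benefit, roi_percent


# Illustrative figures only: 400 errors a year before training, 250 after,
# each costing 120 to correct; training cost 10,000; 80% credited to training.
net, roi = training_roi(400, 250, 120.0, 10_000.0, attribution=0.8)
print(f"Net benefit: {net:.2f}, ROI: {roi:.1f}%")
```

Keeping the attribution share as an explicit parameter makes the key assumption visible to reviewers instead of burying it in the arithmetic.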

Measuring Training Impact and ROI: Proven Approaches

Practical methods turn models into repeatable activities that produce evidence for L&D teams and auditors. Common approaches include surveys, assessments, observations, interviews and KPI tracking. Match methods to evaluation levels: surveys and reaction forms for Level 1; tests and simulations for Level 2; structured observations and performance data for Level 3; and business KPI monitoring for Level 4 and ROI. Strong programmes combine methods to triangulate results and improve attribution, while keeping data collection proportionate for SMEs and large organisations. The table below compares common methods so teams can pick the right mix for skill, compliance and cost constraints.

Comparison of common evaluation methods and when to use them.

| Method | What it measures | Strengths / When to use |
|---|---|---|
| Surveys and feedback | Reaction and perceived learning | Quick and scalable; ideal for Level 1 and qualitative signals |
| Pre- and post-training assessments | Knowledge or skill gain | Direct measure of learning (Level 2); suited to technical competence |
| Structured observations | Behaviour change on the job | Captures Level 3 evidence; useful for procedural and safety tasks |
| Interviews and focus groups | Context, barriers and long-term impact | Explains why change occurred; complements quantitative data |
| KPI tracking and business metrics | Results and financial impact | Direct link to Level 4 and ROI; most effective with baselines and controls |

How to Design Effective Training Surveys and Feedback Forms?

Good survey design starts with a clear measurement objective and a map to evaluation levels — that reduces bias and yields usable evidence. Define the purpose (e.g. reaction, relevance, self‑assessed competence), then pick question types: Likert scales for satisfaction, multiple‑choice for recall, and open text for context. Time surveys appropriately — immediate post‑session surveys capture reaction while follow‑ups at 30–90 days measure perceived behaviour change — and include identifiers so responses can be linked to other data sources. Pilot questions to check clarity and avoid leading language; balance anonymity with the need to connect responses to performance data for audit evidence. Well‑designed surveys give quick Level 1 signals and useful supplementary evidence for Level 3 enquiries.

What Are Pre- and Post-Training Assessments and Their Role in Skill Development?

Pre‑ and post‑training assessments provide objective evidence of learning gain by testing knowledge or skills before and after an intervention — a core Level 2 measure. Effective assessments align to learning objectives, use consistent scoring rubrics and include practical tasks where appropriate. Consider statistical checks (effect size, significance) to strengthen learning claims, while pragmatic metrics such as percentage gain and competency thresholds work well for routine reporting. Link assessment outcomes to development plans and competency records so auditors can see how training met identified needs and led to measurable improvement. Robust pre/post assessment programmes give organisations defensible evidence of skill development tied to job performance.
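As a rough sketch of the metrics mentioned above, the snippet below summarises paired pre/post scores for one cohort using only Python's standard library. The function name, the sample scores and the threshold of 70 are all hypothetical. The effect size shown is one common paired-samples form (mean difference divided by the standard deviation of the differences); the percentage gain is computed on cohort means.

```python
from statistics import mean, stdev


def learning_summary(pre, post, threshold):
    """Summarise paired pre/post assessment scores for one cohort."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two paired scores")
    diffs = [after - before for before, after in zip(pre, post)]
    sd_diffs = stdev(diffs)
    return {
        "mean_pre": mean(pre),
        "mean_post": mean(post),
        # Percentage gain of the cohort mean relative to the baseline mean.
        "percentage_gain": (mean(post) - mean(pre)) / mean(pre) * 100,
        # Paired effect size; undefined when every learner changed equally.
        "effect_size": mean(diffs) / sd_diffs if sd_diffs > 0 else None,
        # Share of learners at or above the competency threshold afterwards.
        "share_meeting_threshold": sum(s >= threshold for s in post) / len(post),
    }


# Invented scores for five learners, listed in the same order in both lists.
pre_scores = [50, 60, 55, 65, 70]
post_scores = [70, 75, 65, 85, 80]
summary = learning_summary(pre_scores, post_scores, threshold=70)
for key, value in summary.items():
    print(key, value)
```

With cohorts this small an effect size is only indicative; for routine reporting the percentage gain and threshold share are usually enough.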

How Does Measuring Training Effectiveness Support ISO Certification Compliance?

Measuring training effectiveness is central to meeting ISO requirements: standards expect organisations to identify competence needs, provide suitable learning and retain objective evidence that people are competent. Evaluation outputs — training plans, assessment scores, observation records and KPI improvements — form the documentary trail auditors examine under competence and awareness clauses. A structured measurement framework maps records directly to clause requirements, reduces audit friction and demonstrates continuous improvement in competence and awareness. The subsections below summarise specific standard expectations and the types of evidence auditors commonly request.

What Does ISO 9001 Clause 7.2 Say About Training Competence?

ISO 9001 clause 7.2 asks organisations to determine required competence, take actions (training or otherwise), evaluate effectiveness and retain appropriate records. Auditors typically look for documented needs analyses, role‑based training plans, assessment results and evidence of competence. Useful evidence includes competency matrices, documented training plans, pre/post assessment scores, observation rubrics and manager sign‑offs on competence. Simply delivering training is not enough — organisations must show interventions produced demonstrable improvement and that improvements were tracked against defined competency criteria. A clear chain — needs → intervention → assessment → competence record — aligns measurement to the clause and eases auditor verification.

How Does ISO 27001 Ensure Information Security Through Training and Awareness?

ISO 27001 requires personnel to be aware of ISMS policies and their security responsibilities, and to receive suitable role‑based training. Evidence of effectiveness can include attendance logs, phishing simulation results, awareness survey scores and role‑specific assessments. Measurement should focus on behaviour change that lowers information risk: for example, reduced phishing click rates, improved secure handling in observations or fewer incidents linked to human error. Role‑targeted training records and follow‑up assessments give auditors direct evidence of information security competence. Linking awareness activity to incident metrics and corrective actions strengthens the case that training reduces risk rather than just increasing knowledge.

Stratlane Certification Ltd. provides ISO certification audit services to help organisations validate training and competence evidence for audit purposes. As an innovative certification body combining experienced auditors with AI‑enabled tools, Stratlane focuses on ISO 9001, ISO 27001 and ISO 42001 compliance. Their process maps training programmes to clause requirements, highlights weak evidence and recommends remediation so organisations are audit‑ready. Organisations in London and beyond can use this service to convert training measurement outputs into the records auditors expect and to request a quote or book an audit aligned to certification objectives.

What Business Benefits and ROI Can Be Achieved Through Effective Training Measurement?

Measuring training effectiveness turns learning activity into quantifiable business outcomes — productivity gains, quality improvements, lower error costs and better customer experience — so leaders can act on evidence rather than instinct. Quantifying benefits means mapping learning outcomes to operational KPIs, translating KPI changes into monetary terms and attributing change to training using control comparisons or validated contribution analysis. The table below maps common benefits to metrics and gives pragmatic quantification hints useful for ROI calculations and executive reporting.

Training benefits mapped to measurable KPIs, with practical quantification hints.

| Benefit | Metric / KPI | How to quantify |
|---|---|---|
| Productivity gain | Output per labour hour | Compare baseline and post‑training output; multiply the gain by labour cost per hour |
| Error reduction | Defect rate or rework costs | Calculate avoided costs from fewer defects and sum over the review period |
| Customer satisfaction | CSAT / NPS | Convert improved retention or reduced churn into revenue impact using customer lifetime value |
| Time-to-competence | Days to proficiency | Value reduced onboarding time as salary savings and faster billable contribution |
| Compliance risk reduction | Incident frequency | Estimate avoided incident costs and potential regulatory penalties |

How Does Effective Training Improve Employee Performance and Retention?

Effective training closes skill gaps, clarifies expectations and enables people to hit targets more consistently; when linked to career pathways it also boosts engagement and reduces turnover and hiring costs. Track performance appraisal scores, productivity metrics, error or incident rates and voluntary turnover for trained versus untrained cohorts. Attribution techniques — matched cohorts, phased rollouts and time‑series analysis — help separate training effects from other organisational changes and strengthen the business case. Reporting should combine operational KPIs with human outcomes such as retention and engagement to show how training drives sustained performance improvements.
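One simple way to use a trained and an untrained cohort is a difference-in-differences comparison: subtract the comparison group's change from the trained group's change. The text above does not name this technique, so treat the sketch below as one possible option. The data are invented, and the estimate is only credible if both groups would have followed similar trends without training.

```python
from statistics import mean


def difference_in_differences(trained_before, trained_after,
                              comparison_before, comparison_after):
    """Estimate the training effect as the trained cohort's change
    minus the change seen in an untrained comparison cohort."""
    trained_change = mean(trained_after) - mean(trained_before)
    comparison_change = mean(comparison_after) - mean(comparison_before)
    return trained_change - comparison_change


# Hypothetical output per hour for four people in each cohort.
trained_before = [10, 12, 11, 11]
trained_after = [13, 15, 14, 14]
comparison_before = [10, 11, 12, 11]
comparison_after = [11, 12, 13, 12]

effect = difference_in_differences(
    trained_before, trained_after, comparison_before, comparison_after
)
print(f"Estimated training effect: {effect} units per hour")
```

In this invented example both cohorts improved, so a plain before/after comparison of the trained group would overstate the effect by the amount the comparison group gained without training.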

How Can Organisations Prove Learning and Development ROI to Stakeholders?

Proving ROI needs a concise, evidence‑based narrative that summarises inputs, validated outcomes and the ROI calculation with assumptions clearly documented. A one‑page ROI summary usually includes the training objective, costs, baseline KPI, measured improvement, conversion to monetary benefit, net benefit and ROI percentage, plus sensitivity notes on key assumptions. Simple visuals, such as before/after KPI charts and a short assumptions table, let executives and auditors assess rigour quickly. Include governance details (who ran the analysis, data sources and control methods) to increase credibility; a light‑touch external review can further validate assumptions and help win stakeholder acceptance.
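The sensitivity notes in a one-page summary can be produced with a small table showing how ROI moves as a key assumption changes. The sketch below varies the share of the measured benefit attributed to training. The figures and the names `sensitivity_table` and `break_even_share` are hypothetical.

```python
def roi_percent(monetary_benefit, training_cost):
    """ROI = (net benefits / training costs) x 100."""
    return (monetary_benefit - training_cost) / training_cost * 100


def sensitivity_table(gross_benefit, training_cost, attribution_shares):
    """Return (share, ROI %) pairs for each assumed attribution share."""
    return [
        (share, roi_percent(gross_benefit * share, training_cost))
        for share in attribution_shares
    ]


def break_even_share(gross_benefit, training_cost):
    """Attribution share at which the benefit just covers the cost."""
    return training_cost / gross_benefit


# Invented figures: 18,000 measured benefit against a 10,000 training cost.
rows = sensitivity_table(18_000.0, 10_000.0, [0.5, 0.75, 1.0])
for share, roi in rows:
    print(f"{share:.0%} attributed to training -> ROI {roi:.0f}%")
print(f"Break-even attribution share: {break_even_share(18_000.0, 10_000.0):.1%}")
```

Showing the break-even share alongside the range tells stakeholders how wrong the attribution assumption could be before the programme stops paying for itself.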

Stratlane Certification Ltd.’s audit process is an example of how external review can reveal training gaps that, when addressed, deliver measurable ROI and documented business benefit. Their AI‑enabled audits flag weak evidence, recommend targeted measurement techniques and help organisations present succinct, transparent ROI narratives to stakeholders.

What Are Common Challenges in Measuring Training Effectiveness and How Can They Be Overcome?

Typical challenges include attribution (isolating training impact from other variables), inconsistent data and integration, time‑lags between training and business outcome, and limited evaluation capability in many L&D teams. Address these with mixed‑methods designs, baseline data collection, clear governance allocating roles and responsibilities, and simple experimental approaches such as phased rollouts or control groups. Build basic analytical capability for statistical checks and ensure HR and operations systems can share identifiers to link learning to performance. The list below summarises common pitfalls and recommended mitigations for programme owners.

- Attribution ambiguity: Use control groups or phased implementation to isolate training effects.

- Poor data quality: Set data standards and validation rules for assessments and performance metrics.

- Long time-lags: Define intermediate indicators and track leading KPIs while waiting for final outcomes.

- Lack of governance: Assign evaluation ownership, set reporting cadences and clarify decision rights to sustain rigour.

Why Do Many Organisations Struggle to Link Training to Business Outcomes?

Organisations often lack baseline metrics, fail to align learning objectives with operational KPIs and don’t plan measurement into interventions — all of which undermine attribution. Confounding changes (process updates, market shifts, restructures) make causal claims harder unless evaluations account for them. L&D teams may also lack access to the right data or the analytical skills for contribution analysis. A simple diagnostic checklist — confirm baseline availability, alignment of objectives to KPIs and presence of a control or comparison group — helps pinpoint where linkage is failing in your context.

What Strategies Improve Accuracy and Usefulness of Training Evaluation?

Accuracy improves when you use mixed methods that combine quantitative and qualitative data, apply experimental or quasi‑experimental designs, establish clear baselines and use consistent identifiers to join learning and performance data. Governance matters: appoint a measurement lead, fix reporting cadences, document assumptions and require peer review of ROI and attribution statements. Practical steps include using validated assessment tools, sampling for observations, triangulating across sources and documenting limitations openly. These practices produce evidence that supports decision‑making and stands up to auditors and stakeholders.
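Joining learning and performance data on a consistent identifier can be as simple as the sketch below. The field names (`learner_id`, `post_score`, `errors_per_month`) and the records are hypothetical; real systems would export their own schemas. Unmatched identifiers are reported rather than silently dropped, since gaps in linkage are themselves a data-quality finding.

```python
def join_on_learner_id(learning_records, performance_records):
    """Link learning records to performance records by learner_id.

    Returns (joined, unmatched_ids); unmatched_ids lists learners with
    a learning record but no performance record.
    """
    performance_by_id = {rec["learner_id"]: rec for rec in performance_records}
    joined, unmatched_ids = [], []
    for record in learning_records:
        performance = performance_by_id.get(record["learner_id"])
        if performance is None:
            unmatched_ids.append(record["learner_id"])
        else:
            joined.append({**record, **performance})
    return joined, unmatched_ids


# Invented sample data standing in for LMS and operations exports.
learning = [
    {"learner_id": "E001", "post_score": 82},
    {"learner_id": "E002", "post_score": 74},
    {"learner_id": "E003", "post_score": 91},
]
performance = [
    {"learner_id": "E001", "errors_per_month": 3},
    {"learner_id": "E003", "errors_per_month": 1},
]

joined, unmatched = join_on_learner_id(learning, performance)
print(joined)
print("No performance data for:", unmatched)
```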

Which Employee Development Metrics and KPIs Best Reflect Training Impact?

Choose KPIs by mapping desired outcomes to measurable indicators that are available with suitable quality and cadence; the best KPIs cover learning gain, behavioural transfer and business results. Key categories include completion and certification rates, learning gain (assessment scores), on‑the‑job performance metrics (productivity, error reduction) and human‑capital outcomes such as retention and engagement. The table below gives operational definitions, recommended data sources and reporting cadence to help build an audit‑ready KPI dashboard.

KPI reference table for training impact, with definitions, data sources and reporting frequency.

| KPI | Definition | Data source | Frequency |
|---|---|---|---|
| Completion rate | Percentage of enrolled learners who finish required training | LMS reports | Monthly |
| Learning gain | Average improvement from pre‑ to post‑assessment scores | Assessment platform | Per course |
| Performance change | Change in role‑specific KPIs (e.g. output per hour) | HRIS and operations systems | Quarterly |
| Error rate reduction | Decrease in defects or incidents linked to trained cohort | Quality or incident system | Monthly |
| Time-to-competence | Days until a new or moved employee reaches target performance | Onboarding records | Quarterly |

What Key Performance Indicators Should Be Tracked for Training Success?

Operational KPI choice should prioritise relevance, data availability and feasibility of attribution. Track completion, learning gain, behaviour measures and business KPIs that training plausibly influences. Each KPI needs a clear calculation, an owner and a reporting frequency — for example, learning gain = (post‑score − pre‑score) / pre‑score, owned by L&D with assessment data stored centrally. Combine short‑term indicators (completion, scores) with medium‑term measures (behaviour change) and long‑term outcomes (productivity, retention) to create a layered view for operations and audit. Present KPI trends with documented methods and limitations so stakeholders can interpret results correctly and audits proceed smoothly.
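The idea that each KPI carries a calculation, an owner and a reporting frequency can be captured in a small registry. The sketch below is one hypothetical way to do it. `KpiDefinition` and `KPI_REGISTRY` are names invented here, and the owner shown for completion rate is an assumption. The learning-gain formula is the one given above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class KpiDefinition:
    name: str
    owner: str
    frequency: str
    calculate: Callable[..., float]


def learning_gain(pre_score: float, post_score: float) -> float:
    """Relative learning gain: (post-score - pre-score) / pre-score."""
    if pre_score <= 0:
        raise ValueError("pre_score must be positive for a relative gain")
    return (post_score - pre_score) / pre_score


def completion_rate(completed: int, enrolled: int) -> float:
    """Percentage of enrolled learners who finished the training."""
    if enrolled <= 0:
        raise ValueError("enrolled must be positive")
    return completed / enrolled * 100


KPI_REGISTRY = {
    "learning_gain": KpiDefinition(
        "Learning gain", "L&D", "Per course", learning_gain),
    # Owner is an assumption; the frequency follows the KPI table.
    "completion_rate": KpiDefinition(
        "Completion rate", "L&D", "Monthly", completion_rate),
}

gain = KPI_REGISTRY["learning_gain"].calculate(60, 75)
rate = KPI_REGISTRY["completion_rate"].calculate(45, 50)
print(f"Learning gain: {gain:.0%}, completion rate: {rate:.0f}%")
```

Because the formula lives next to the owner and cadence, a reviewer can check how a reported figure was produced without chasing separate documents.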

How Can Behavioural Change and Learning Outcomes Be Measured Effectively?

Measure behavioural change with structured observations, manager assessments, 360 feedback and performance metrics tied to specific competencies; use rubrics and standardised scoring to improve reliability. An observation rubric should define behaviour anchors, rating scales and required evidence for each score, and observers should be trained to ensure inter‑rater consistency. Triangulate observational data with performance indicators and assessment scores, and keep records that link individual assessments to training activities and follow‑up actions. Consistent evidence collection supports continuous improvement and gives auditors what they need to verify competence and sustained behaviour change.
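Inter-rater consistency can be checked by having two observers rate the same sessions and comparing their scores. The text above does not prescribe a statistic; Cohen's kappa, sketched below, is one widely used option because it corrects raw agreement for agreement expected by chance. The ratings are invented.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters scoring the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both raters must score the same non-empty item set")
    n = len(ratings_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance, from each rater's category frequencies.
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used a single identical category throughout
    return (observed - expected) / (1 - expected)


# Two hypothetical observers rating the same ten observed tasks.
observer_1 = ["meets"] * 5 + ["meets", "below"] + ["below"] * 3
observer_2 = ["meets"] * 5 + ["below", "meets"] + ["below"] * 3

kappa = cohens_kappa(observer_1, observer_2)
print(f"Cohen's kappa: {kappa:.2f}")
```

A value near 1 indicates strong agreement and a value near 0 indicates agreement no better than chance; a low result suggests the rubric anchors or observer training need attention before the observations are used as evidence.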


Frequently Asked Questions

What are the benefits of measuring training effectiveness for organisations?

Measuring training effectiveness reveals what works and what doesn’t. It helps identify skill gaps, improve employee performance and increase productivity. By quantifying ROI, organisations make better decisions about future investment. Measurement also supports ISO and other compliance requirements by providing traceable evidence of competence. Overall, it promotes accountability and a cycle of continuous improvement.

How can organisations ensure the reliability of their training evaluation data?

Reliability comes from clear data standards and validation processes. Use consistent measurement tools, collect data from multiple sources to triangulate findings and assign data ownership. Regularly review methods and update them based on feedback and changing needs. Simple governance — roles, responsibilities and quality checks — makes evaluation data trustworthy.

What role does employee feedback play in evaluating training effectiveness?

Employee feedback gives valuable context on relevance and experience. Surveys, interviews and focus groups surface barriers, attitudes and suggestions that quantitative data can’t explain. Qualitative feedback complements assessment scores and helps you refine content and delivery. Involving learners also increases engagement and buy‑in for future programmes.

How can organisations overcome challenges in linking training to business outcomes?

Start with a structured evaluation plan: collect baseline data, align learning objectives to business KPIs and, where possible, use control or comparison groups. Communicate closely with business units so KPIs and timing align. Use phased rollouts or matched cohorts to strengthen attribution and document assumptions clearly.

What are some common pitfalls in training evaluation, and how can they be mitigated?

Common pitfalls include poor data quality, missing baselines and weak alignment between training and business goals. Mitigate these by setting data standards, running needs analyses before interventions and embedding continuous feedback loops. Invest in basic evaluation skills and ensure systems can join learning and performance data.

How can organisations effectively communicate training ROI to stakeholders?

Keep ROI communication concise and evidence‑based. Use a one‑page summary with objective, costs, baseline KPI, measured improvement, monetary conversion, net benefit and ROI percentage, plus key assumptions. Use simple visuals and list data sources and governance steps so executives and auditors can quickly assess rigour and credibility.

Conclusion

Measuring training effectiveness is essential for organisations that want to improve performance and meet compliance obligations. Clear measurement turns learning into evidence of improvements in productivity, quality and engagement, and supports data‑driven decision making. Robust evaluation methods also make ISO audits simpler by providing a transparent record of competence and improvement. If you need help refining your measurement approach, our services can support practical, audit‑ready solutions tailored to your goals.